We live in a terrorized age. At the dawn of the 21st century, the world is coping not only with the constant threat of violent extremism but also with global warming, potential pandemic diseases, economic uncertainty, Middle Eastern conflicts, the debilitating consequences of partisan politics, and so on. The list grows each time you click on the news. Fear seems to be infecting the collective consciousness like a virus, resulting in a culture of anxiety and a rising tide of helplessness, despair, and anger. In the U.S., symptoms of this chronic unease can be seen in the proliferation of apocalyptic paranoia and conspiracy theories coupled with the record sales of both weapons and tickets for Hollywood’s superhero blockbusters, fables that reflect post-9/11 fears and the desire for a hero to sweep in and save us.

That’s why I want to take the time to analyze some complex but important concepts like the sublime, the Gothic, and the uncanny, ideas which, I believe, can help people get a rational grip on the forces that terrorize the soul. Let’s begin with the sublime.

The word is Latin in origin and means rising up to meet a threshold. To Enlightenment thinkers, it referred to those experiences that challenged or transcended the limits of thought, to overwhelming forces that left humans feeling vulnerable and in need of paternal protection. Edmund Burke, one of the great theorists of the sublime, distinguished this feeling from the experience of beauty. The beautiful is tame, pleasant. It comes from the recognition of order, the harmony of symmetrical form, as in the appreciation of a flower or a healthy human body. You can behold them without being unnerved, without feeling subtly terrorized. Beautiful things speak of a universe with intrinsic meaning, tucking the mind into a world that is hospitable to human endeavors. Contrast this with the awe and astonishment one feels when contemplating the dimensions of a starry sky or a rugged, mist-wreathed mountain. From a distance, of course, they can appear ‘beautiful,’ but, as Immanuel Kant points out in Observations on the Feeling of the Beautiful and Sublime, it is a different kind of pleasure because it contains a “certain dread, or melancholy, in some cases merely the quiet wonder; and in still others with a beauty completely pervading a sublime plan.”

This description captures the ambivalence in sublime experiences, moments where we are at once paradoxically terrified and fascinated by the same thing. It is important here to distinguish ‘terror’ from ‘horror.’ Terror is the experience of danger at a safe distance, the potential of a threat, as opposed to horror, which refers to imminent dangers that actually threaten our existence. If I’m standing on the shore, staring out across a vast, breathtaking sea, entranced by the hissing surf, terror is the goose-pimply, weirded-out feeling I get while contemplating the dimensions and unfathomable power before me. Horror would be what I feel if a tsunami reared up and came crashing in. There’s nothing sublime in horror. It’s too intense to allow for the odd mix of pleasure and fear, no gap in the feeling for some kind of deeper revelation to emerge.

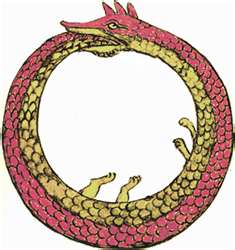

While Burke located the power of the sublime in the external world, in the recognition of an authority ‘out there,’ Kant has a more sophisticated take. Without digging too deeply into the jargon-laden minutiae of his critique, suffice it to say that Kant ‘subjectivizes’ the concept, locating the sublime in the mind itself. I interpret Kant as pointing to a recursive, self-referential quality in the heart of the sublime, an openness that stimulates our imagination in profound ways. When contemplating stormy seas and dark skies, we experience both our nervous system’s anxious reaction to the environment and a weird sense of wonder and awe. Beneath this thrill, however, is a humbling sense of futility and isolation in the face of the Infinite, in the awesome cycles that evaporate seas, crush mountains, and dissolve stars without a care in the cosmos as to any ‘meaning’ they may have to us. Rising up to the threshold of consciousness is the haunting suspicion that the universe is a harsh place devoid of a predetermined purpose that validates its existence. These contradictory feelings give rise to a self-awareness of the ambivalence itself, allowing ‘meta-cognitive’ processes to emerge. This is the mind’s means of understanding the fissure and trying to close the gap in a meaningful way.

Furthermore, by experiencing forms and magnitudes that stagger and disturb the imagination, the mind can actually grasp its own liberation from the deterministic workings of nature, from the blind mechanisms of a clockwork universe. In his Critique of Judgment, Kant says “the irresistibility of [nature’s] power certainly makes us, considered as natural beings, recognize our physical powerlessness, but at the same time it reveals a capacity for judging ourselves as independent of nature and a superiority over nature…whereby the humanity in our person remains undemeaned even though the human being must submit to that dominion.” One is now thinking about their own thinking, after all, reflecting upon the complexity of the subject-object feedback loop, which, I assert, is the very dynamic that makes self-consciousness and freedom possible in the first place. We can’t feel terrorized by life’s machinations if we aren’t somehow psychologically distant from them, and this gap entails our ability to think intelligently and make decisions about how best to react to our feelings.

I think this is in line with Kant’s claim that the sublime is symbolic of our moral freedom—an aesthetic validation of our ethical intentions and existential purposes over and above our biological inclinations and physical limitations. We are autonomous creatures who can trust our capacity to understand the cosmos and govern ourselves precisely because we are also capable of being terrorized by a universe that appears indifferent to our hopes and dreams. Seen in this light, the sublime is like a secularized burning bush, an enlightened version of God coming out of the whirlwind and parting seas. It is a more mature way of getting in touch with and listening to the divine, a reasonable basis for faith.

My faith is in the dawn of a post-Terrorized Age. What Kant’s critique of the sublime teaches me is that, paradoxically, we need to be terrorized in order to get there. The concept of the sublime allows us to reflect on our fears in order to resist their potentially debilitating, destructive effects. The antidote is in the poison, so to speak. The sublime elevates these feelings: the more sublime the terror, the freer you are, the more moral you can be. So, may you live in terrifying times.